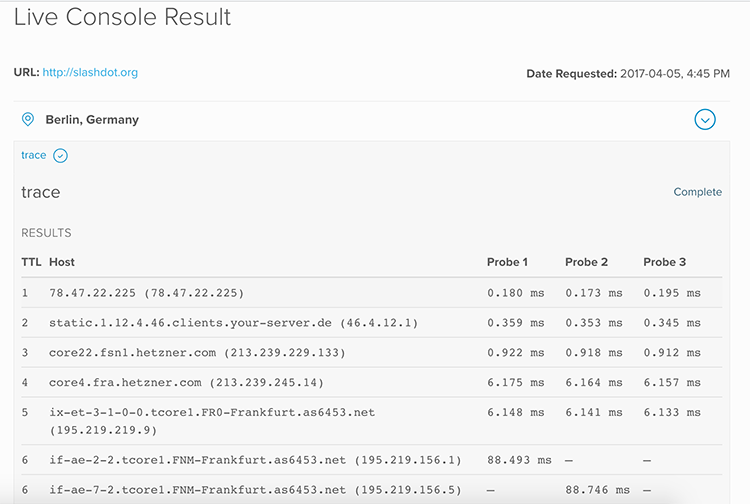

We shipped a small update to our monitoring platform Observ.io this week, showing the TTL (/hop) in traceroutes. It's a small thing, but I'm really happy it landed, mostly because of the history here.

When I first launched Where's it Up, it looked like this:

I built the backend for exactly the problem I was solving. One of the things I wanted to do in the frontend was to highlight different hops (not display their count), so the results it generated included a flipping 0 and 1 so I could zebra-stripe my table. This legacy is still around, and shows up in the WIU response.

Getting this data into Observ.io involved ripping open a backend that's remained largely unchanged for years (pulling up history in git shows the file is older than the repo it lives in now), finding (and fixing) a few bugs that have been around for ages, and adding something much more helpful in its place. The diff for the fixes shows a lot of ripped-out code, and something much easier to understand being dropped into place.

I'm really happy to see this code getting some love, and watch the errors of my past be slowly ground down. I'll be even happier when we can deprecate that 0/1.

Lately, programmers have been tweeting confessions of their inability to balance a binary tree on a whiteboard or remember the parameter order for various functions in response to the horrible interview processes at many companies. I've been lucky enough to avoid most of those interviews, but I thought it would be nice to call out some better interviews I've had over the last few years.

Freshbooks

I don't think I wrote a single line of code during this interview process. We discussed things on a whiteboard and I wrote some pseudo code. The problem I was addressing evolved over time and the conversation became more words and less drawing. For the "programming" portion, I was paired with one of their engineers. He sat at the keyboard and gave me a problem to solve in their actual codebase. Rather than being forced to navigate a foreign code base, figure out some editor, etc., I just said things like "I'd open up the template for that page", or "I'd jump to where that form was processed" and "Look at that function and its unit tests to see how it handles that". He navigated his codebase with ease, took the suggestions I gave him, and we fixed a bug. When it came time to write unit tests, we talked about the function we were looking at and agreed it didn't really need one.

By placing their engineer at the keyboard, rather than me, I felt like Freshbooks did a great job of learning if I could code without testing my memory of parameter order. Before I left Freshbooks, they were improving their interview process to provide gender parity on the part of the interviewers at each stage of the process, which seems like another good move.

Freshbooks is hiring in Toronto, no remotes.

Stripe

The first thing Stripe did when we set up an interview was send me a document describing their interview process and how I'd be evaluated. I thought this was quite helpful! If I'd had fewer interviews under my belt, I would have found it invaluable. The "phone screen" was me sharing my screen while I solved pre-defined problems in my language & editor of choice. The instructions I received ahead of time asked me to make sure I had the software required ready to go to write & run code. My interviewer encouraged me to Google, use documentation, etc., whenever I wished. When it came to the in-person interviews, I answered theoretical questions with drawings or pseudo code on a whiteboard. When it came to actually coding, I again wrote on my laptop, in my language of choice, Googling/reading documentation etc. as required without judgement. When I finished a problem a bit early, the interviewer and I discussed some next steps: making a webpage, adding extra data to the display, etc. We both agreed that asking a PHP developer to make a webpage wasn't actually going to be a challenge, so we ran with something else.

Stripe solved some of the problems differently than Freshbooks: by telling me in advance what I'd be doing, I was able to prepare. By letting me code in my own environment, and my own language, on my own computer, I really felt that they had set me up for success. As I left, I was given an iPad with an optional survey to take about the interview process and how they could improve.

Stripe is hiring in San Francisco, they have some remotes.

In Closing

Not all interviews are horrible, and there are ways to do them better. If your company is hiring, talk to recent hires and make sure your interview process isn't about to be the butt of someone's Twitter joke. If you're about to interview somewhere, Julia Evans has a set of great questions to ask in an interview.

disclaimer: I clearly worked for both Freshbooks and Stripe; I don't anymore. Neither of those links are affiliate/referral ones.

My mom helped me open my first bank account when I was 16. I remember the little book I could stick in the machine to get an update of all my transactions. These tended to be concentrated around Christmas and my Birthday, at least until I scored my first job at Walmart.

Since then Canada Trust was purchased by TD, I went to University, memorized my account number while working for eDonkey (USD cheques, quite the pain), moved to Montréal, back to Ontario, got married, and had 19 jobs I can remember. Throughout all that, as far as I can tell, my bank got steadily worse. Features I cared about (like being able to buy things up to my account balance with my debit card, setting my own cash withdrawal limits) went away, and fees climbed steadily.

About two years ago I thought the fees I was paying were out of control, so they moved me onto a fancy "unlimited" account, which included a bunch of things I'd been paying for individually, including my USD account, and some "overdraft protection" I never actually used. Three months later they "adjusted" their account offerings, and my "unlimited" account stopped including the other things I was using. This bumped my fees up by another $10/month. I tried to go back in and find a new account, but couldn't even get past the "Welcome" desk. The gatekeeper there told me I already had the best account, and wouldn't let me talk to anyone else in the branch.

I signed up for an account with ING Direct (an online-only bank) many years ago for some basic savings. As my frustration with TD Canada Trust peaked, their ease of use, interest payments on accounts, and complete lack of fees struck a chord. I began moving all my transactions over. Somewhere along the way ING Direct became Tangerine. Cheque deposit with my cell phone, easy automatic savings programs, great stuff.

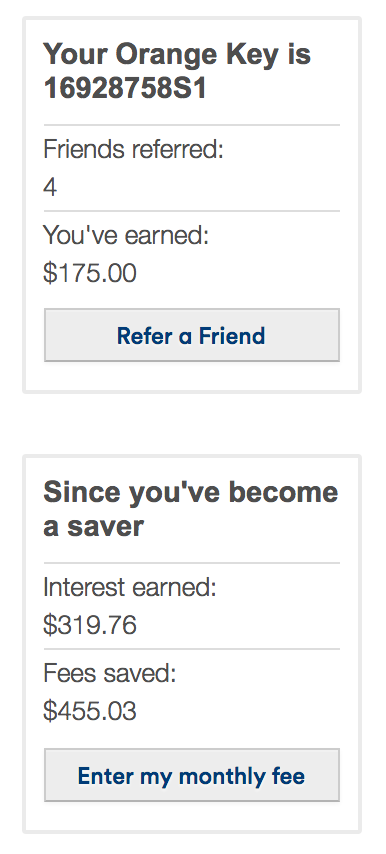

Tangerine has a neat feature where you enter your monthly fees at your old bank, and it counts those up over time. It's nice watching those numbers climb.

The only drawback to Tangerine is that they don't have a mechanism to receive wire transfers. During my tenure at Social and Scientific Systems I participated in their ESOP, which they pay out by wire. Getting paid out is a lengthy process, so despite departing in 2014 my disbursement didn't arrive until this week.

So today, about 20 years after opening my TD account, I closed it forever. With Tangerine I'm saving over $300/year in fees, earning interest, saving more money with their easy automatic withdrawals, and actually enjoying their website. Would recommend! If you sign up today they'll give you $50 for free! My "Orange Key" is: 16928758S1.

WonderProxy broke two records this week: 200 servers, and highest single day revenue. This left me considering what advice I’d give to my past self or other people considering starting their own company:

Charge Money

This may not be the route to a billion dollar valuation in 18 months, but it’s a great way to lose less, and be able to count on yourself rather than external investors. WonderProxy launched with 8 servers, and my first prospective customer trying things out. They were paying us within a few weeks. Dollars in our pockets did a much better job of proving this was a viable business than friends saying “that sounds good”.

Free customers stay free

Over the years, we’ve had a number of customers on trial accounts or using alpha services come back with “we’d pay if only you did …”. Ignore them. You can implement that feature but they’ll never convert to paying customers. When paying customers make similar requests, pay attention, they’re much more likely to start paying you more.

Big companies pay slowly

Our model is pretty close to a SaaS: people pay up front, get access, then when they stop paying, the service goes away. When a big company comes along, they’re going to want a PO; legal is going to want changes to your terms of service; they’ll insist on Net 90; people will invite you to conference calls where you’ll do nothing more than read them part of your website & agree to email them something… It’s a disaster. Just grin and bear it. Part of the problem is that the person who wants your product has zero ability to manage any part of the process of paying you regardless of how much time or money your product can save them or the company. Accounts Payable has a process and if it’s a big company, it’s going to suck for vendors (you) and purchasers (your prospective user) alike.

Set a minimum for manual transactions

Some customers won’t want to use your nice automated systems, and end up requiring manual effort. Set a floor in terms of dollar value for manual transactions. It’s not worth it for us to generate an invoice, email it, wait for a cheque to arrive, run to the bank to deposit, and upgrade an account… all for $14.95. We try to restrict these steps to our high value plans, or ask customers interested in them to pre-pay for large blocks of time (6 months or a year) to cut down on the repetition.

Match expense periods to income

When we hired a long term contractor, we originally set it up to pay them once every four weeks. This was folly. Under that plan we’d end up paying that contractor 13 times a year (52/4 = 13), which means paying them twice in one month. Our customers only pay us once a month. So every month, we’d need to pay our contractor, and remember to set aside 1/12th of their fee for that special “two bills month”. Certainly over the course of a year the total cost would be the same, but it’s an easy way to run into cash flow problems.
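To make the arithmetic concrete, here is a minimal sketch that lists a year of payments made every 28 days. The first payment date (2 January 2015) is an arbitrary assumption for illustration, not our real schedule.

```python
from collections import Counter
from datetime import date, timedelta

# Hypothetical first payment date, chosen only for illustration.
first_payment = date(2015, 1, 2)

# Collect every payment that falls in the same calendar year, 28 days apart.
payments = []
current = first_payment
while current.year == first_payment.year:
    payments.append(current)
    current += timedelta(days=28)

# Count payments per calendar month and find the months that get hit twice.
per_month = Counter(p.month for p in payments)
double_months = [month for month, count in per_month.items() if count == 2]

print(len(payments))   # 13 payments in the year, against 12 monthly invoices
print(double_months)   # [1]: January gets two payments with this start date
```

Which month gets the second bill depends on the start date, but there is always one more payment than there are months of income.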

Write down verbal agreements

When Will and I first started, he was just giving me a hand as I was in well over my head. After that continued for a while I “gave” him 20% of the company that couldn’t have existed without him. We had that conversation on Skype but we immediately wrote down our agreement in GChat or email. That became a pattern for us: discussing things frankly over Skype/Google Hangouts, then following it up with a written copy. When the company incorporated, our previous agreements were formalized (and the equity distribution became more equitable).

The internet has witnessed several partner fights over the past few years. Written agreements, even in email, can be very helpful identifying who said what, and what the agreement made years ago was. Had either of us attempted to gain the upper hand, we would have had more than foggy memories of conversations to go on.

Currently, members of the PHP community are considering adopting a code of conduct. This discussion is taking place on Internals, while a parallel conversation takes place on reddit. It largely seems to mirror the conversations we've seen roll through many communities over the past few years.

My opinion on these things has evolved over time. The implementation of some of the codes of conduct leaves me feeling wary: I could be accused of something, and be ejected and shunned by people with no background in investigation, without any ability to raise a defense. BUT that’s not what we’re seeing out there today. What we are seeing right now is a group of people we very much need involved in our communities being harassed, abused, and chased away by a few assholes. We need to attack the problems we have now first; if we have new problems in the future, we can revise.

Comments disabled, there's a form down there, but it's a lie.

When I first started working on Where’s it Up API, I struggled with its pricing model. What should I charge? How should I organize customers? How much should I charge each group? etc. My friend Sean asked me if the tests people were running cost me anything. I replied that they didn’t; they were merely a rounding error in my network traffic. He suggested a flat-rate-per-month model. I made up a few plans, at reasonable price points in the $20-$60/month range, and launched. We’ve enjoyed a reasonable amount of revenue using this model.

Quite a bit has changed since then:

- Our network is larger

- The backend code has been completely refactored (moving from thousands of requests per day to millions will do that to you)

- We’ve managed to acquire a bunch of great customers.

Two other major adjustments have changed how I look at Where’s it Up:

- We have customers running more tests, faster, than I ever imagined. Where’s it Up users now create enough network traffic to represent a real number of dollars.

- We’ve expanded the number and type of tests we’re running, such that some are orders of magnitude more bandwidth- and CPU-intensive than anything we considered at launch. Compare: generating a screenshot of a web page, compressing it, and uploading it to AWS S3 (our shot test, powering ShotSherpa), to a single DNS lookup.

I tried to make the old pricing model work. We tiered some of our job types, limiting them to customers on even more expensive plans. Then we capped our plans at a total number of tests per month. Ultimately, the flat-rate model had two problems: customers couldn’t use some of the test types unless they were giving us $200/month, and running a million screenshots from our expensive Alaska server would cost us more than $200.

New Model

Our new pricing model is to sell credits, and charge a different number of credits for different test types. Running a quick DNS costs one credit, whereas a screenshot costs ten. This model allows us to offer every test type to every user, and to bill users more accurately for their usage.

Rather than giving every user one free month when they sign up, we’ll give them 10,000 free credits (at least until my accountant finds out). This is enough to:

- Confirm that one HTTP endpoint is accessible 10,000 times; or

- Confirm that one HTTP endpoint is accessible on every continent, every hour, for 35 days; or

- Check DNS results for your domain from 70 countries, daily, for the next 142 days; or

- Take a screenshot of your website every morning for the next 2.74 years
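The last two figures follow directly from the credit costs stated above (a DNS lookup is one credit, a screenshot is ten). A quick sketch of that arithmetic, where the function and dictionary names are hypothetical and not part of our API:

```python
FREE_CREDITS = 10_000

# Per-test credit costs stated above: a DNS lookup is 1, a screenshot is 10.
COST = {"dns": 1, "shot": 10}


def days_of_coverage(test_type, runs_per_day, credits=FREE_CREDITS):
    """Whole days a credit balance lasts at a fixed daily usage."""
    return credits // (COST[test_type] * runs_per_day)


dns_days = days_of_coverage("dns", runs_per_day=70)    # 70 countries, daily
shot_days = days_of_coverage("shot", runs_per_day=1)   # one screenshot a day

print(dns_days)                    # 142
print(round(shot_days / 365, 2))   # 2.74
```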

Conclusion

It’s too soon to call this a success, but I’m happier with the credits model than I have been with anything else we tried or considered. It allows every customer to execute every job type, while ensuring we don’t sell more than we can support.

We just ran into an issue joining a new server to our MongoDB replica set powering Where’s it Up & ShotSherpa. We copied the data over to give it a good starting point, then added it to the replica set. We waited a while, but it still hadn’t joined. The tail of the log showed many connection lines, but nothing telling. When I restarted MongoDB I saw:

`replSet info self not present in the repl set configuration`

I tried pinging the server’s hostname to ensure it was successful. No problem. I tried connecting to the server using the mongo command line on the primary server (`mongo server.example.com:27017`). No problem.

…Eventually I copied the mongo hostname from the replica set configuration, and tried to use it to connect to mongo on the new secondary. No dice! As it turns out the NAT hadn’t been configured to allow that hostname to work locally. A few new entries in our /etc/hosts file later, and our server was joined successfully.

MongoDB Tip:

When MongoDB has trouble connecting, try the connection strings listed in the replica set config from all relevant hosts.
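A rough way to automate that check is a plain TCP connection test against each member string. This is only a sketch: `check_members` is a hypothetical helper, and it confirms that the name resolves and the port accepts connections from the machine it runs on, nothing MongoDB-specific.

```python
import socket


def check_members(members, timeout=3.0):
    """Try a TCP connection to each "host:port" string.

    Returns a dict mapping each member to None on success,
    or to the error message on failure.
    """
    results = {}
    for member in members:
        host, _, port = member.rpartition(":")
        try:
            # Resolves the name and connects, exactly as typed in the config.
            with socket.create_connection((host, int(port)), timeout=timeout):
                results[member] = None
        except OSError as exc:
            results[member] = str(exc)
    return results
```

Copy the host strings verbatim from the replica set configuration, and run the check on every member, including the new one. In our case it was the new secondary that couldn't reach its own configured name.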

I’ve made plenty of mistakes in the code powering WonderProxy, perhaps most famously equating 55 with ∞ for our higher-level accounts (issues with unsigned tinyint playing a close second). Something I think I got right though, was the concept of “managed accounts”. It’s a simple boolean flag on contracts, and when it’s set, the regular account de-activation code is skipped.

Having this flag allows us to handle a few things gracefully:

- Large value contracts

By marking them as managed, they don’t expire because someone was on vacation when the account expired. They stay happy, the revenue continues, the expiry date remains accurate.

- Contracts with tricky billing processes

The majority of our contracts pay us with PayPal or Stripe. A selection of contracts however have complex hoops involving anti-bribery policies, supplier agreements etc. This gives us some time to get this ironed out.

- Contracts where we’ve failed to bill well

We occasionally make mistakes when billing our clients. When we’ve screwed up in the past, this helps ensure that there’s time for everything to resolve amicably.

This doesn’t mean the system has been without flaw. We currently get daily emails reporting on new signups, expired accounts, etc. They also list every account that was not expired because of the managed flag.

Like most features, that report was added after mistakes were made: we’d left some managed accounts unpaid for months. With better reporting now, though, we couldn’t be happier with it.
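In spirit, the flag and the report amount to something like the sketch below. The field names (`expires`, `managed`) and the function are hypothetical stand-ins, not our actual schema or code.

```python
from datetime import date


def process_expirations(contracts, today):
    """Split expired contracts into those to deactivate and those skipped as managed."""
    deactivate, skipped_managed = [], []
    for contract in contracts:
        if contract["expires"] >= today:
            continue  # still paid up, nothing to do
        if contract["managed"]:
            # Not deactivated, but listed so it shows up in the daily report.
            skipped_managed.append(contract["name"])
        else:
            deactivate.append(contract["name"])
    return deactivate, skipped_managed


contracts = [
    {"name": "small-co", "expires": date(2015, 1, 1), "managed": False},
    {"name": "big-co", "expires": date(2015, 1, 1), "managed": True},
    {"name": "current-co", "expires": date(2015, 12, 31), "managed": False},
]
deactivate, skipped = process_expirations(contracts, today=date(2015, 6, 1))
print(deactivate)  # ['small-co']
print(skipped)     # ['big-co']
```

Returning the skipped list alongside the deactivations is the important part: the flag is only safe if someone sees, every day, which accounts it is protecting.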

We've made significant updates to the infrastructure supporting Where's it Up this year. Many of these changes were necessitated by some quick growth from a few thousand tests per day to several million. Frankly, without the first two, I'm not sure we could have remained stable much past a million tests per day.

MongoDB Changes

I blogged about our switch to MongoDB for Where’s it Up a while back, and we’ve been pretty happy with it. When designing our schema, I embraced the “schema free world” and stored all of the results inside a single document. This is very effective when the whole document is created at once, but it can be problematic when the document will require frequent updates. Our document process was something like this:

- User asks Where’s it Up something like: Test google.com using HTTP, Trace, DNS, from Toronto, Cairo, London, Berlin.

- A bare bones Mongo document is created identifying the user, the URI, tests, and cities.

- One gearman job is submitted for each City-Test pair against the given URI. In this instance, that’s 12 separate jobs.

- Gearman workers pick up the work, perform the work, and update the document created earlier using $set

This is very efficient for reads, but that doesn’t match our normal usage: users submit work, and poll for responses until the work is complete. Once all the data is available, they stop. We’ve optimized for reads in a write heavy system.

The situation for writes is far from optimal. When Mongo creates a document, under exact fit allocation, it considers the size of the data being provided, and applies a padding factor. The padding factor maxes out at 1.99x. Because our original document is very small, it’s essentially guaranteed to grow by more than 2x, probably with the first Traceroute result. As our workers finish, each attempting to add additional data into the document, it will need to be moved, and moved again. MongoDB stores records contiguously on disk, so every time it needs to grow the document it must read it off disk, and drop it somewhere else. Clearly a waste of I/O operations.

It’s likely that Power of 2 sized allocations would better suit our storage needs, but they wouldn’t erase the need for moving entirely, just reduce the number of times it’s done.

Solution: Normalize, slightly. Our new structure has us storing the document framework in the results collection, and each individual work result from the gearman worker is stored in results_details in its own document. This is much worse for us on read: we need to pull in the parent document, then the child documents. On write, we’re saved the horrible moves we were facing previously. Again, the usage we’re actually seeing is: Submit some work, poll until done, read complete dataset, never read again. So this is much better overall.
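The shape of the split can be sketched with plain dictionaries standing in for MongoDB documents. Only the collection names (results, results_details) come from our setup; every field name here is a hypothetical placeholder.

```python
from itertools import product

tests = ["http", "trace", "dns"]
cities = ["toronto", "cairo", "london", "berlin"]

# Parent document: written once when the request arrives (results collection).
parent = {"_id": "req-1", "user": "demo", "uri": "google.com",
          "tests": tests, "cities": cities}

# One child document per City-Test pair (results_details collection).
# Each worker inserts its own document instead of growing the parent with $set.
details = [
    {"request_id": parent["_id"], "city": city, "test": test, "output": None}
    for city, test in product(cities, tests)
]


def read_results(parent, details):
    """The read path: fetch the parent, then every child that points at it."""
    children = [d for d in details if d["request_id"] == parent["_id"]]
    return {**parent, "details": children}


print(len(details))  # 12
```

The read now takes two lookups instead of one, but no write ever forces an existing document to grow and move.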

We had some slight work to manage to make our front end handle both versions, but this change has been very smooth. We continue to enjoy how MongoDB handles replica sets, and automatic failover.

Worker Changes

We’ve recently acquired a new client which has drastically increased the number of tasks we need to complete throughout the day: checking a series of URLs using several tests, from every location we have. This forced us to re-examine how we’re managing our gearman workers to improve performance. Our old system:

- Supervisor runs a series of workers, no more than ~204. (Supervisor’s use of python’s select() limits it to 1024 file descriptors, which allows for ~204 workers)

- Each worker, written in PHP, connects to gearman stating it’s capable of completing all of our work types

- When work arrives, the worker runs a shell command that executes the task on a remote server using a persistent ssh tunnel. Waits for the results, then shoves them into MongoDB.

This gave us a few problems:

- Fewer workers than we’d like, no ability to expand

- High memory overhead for each worker

- The PHP process spends ~99.9% of its time waiting, either for a new job to come in, or for the shell command it executed to complete.

- High load with 1 PHP process per executing job (that actually does work)

We examined a series of options to replace this. Writing a job manager as a threaded Java application was seriously considered. It was eventually shot down due to the complexities of maintaining another set of packages, and due to the reduced number of employees who could help maintain it. Brian L Moon’s Gearman Manager was another option, but it left us running a lot of PHP we weren’t using. We could strip down PHP to make it smaller, but it wouldn’t solve all our problems.

Minimizing the size of PHP is pretty easy. Your average PHP install probably includes many extensions you’re not using on every request, doubly so if you’re looking at what’s required by your gearman workers. Look at ./configure --disable-all as a start.

Solution: Our solution involves some lesser-used functions in PHP: proc_open, stream_select, and occasionally posix_kill.

We set Gearman to work in a non-blocking fashion. This allows us to poll and check for available work without blocking until it becomes available.

Our main loop is pretty basic:

- Check to see if new work is available, and if so start it up.

- Poll currently executing work to see if there’s data available, if so record it.

- Check for defunct processes that have been executing for too long, kill those.

- Kill the worker if it's been running too long.

Check for available work

Available work is fired off using proc_open(). Our work tends to look something like this: `sudo -u lilypad /etc/wheresitup/shell/whereisitup-trace.sh seattle wonderproxy.com`. We save the read pipe for later access, and set it to non-blocking. We only allow each non-blocking worker to execute 40 jobs concurrently, though we plan to increase the limit after more testing.

Poll currently executing work for new data

Check for available data using stream_select() with a very low poll time. Some of our tests, like dig or host, tend to return the data in one large chunk; others, like traceroute, return data slowly over time. Longer running processes (traceroute seems to average 30 seconds) will have their data accumulated over time and appended together. If a read pipe is done (checked with feof()), we close it off, and close the process (proc_close()).

Check for defunct processes

We track the last successful read from each process; if it was more than 30 seconds ago we close all the pipes, then recursively kill the process tree.

Kill the worker

Finally, the system keeps track of how much work it’s done. Once it hits a preset limit, it stops executing new jobs. Once its current work is done, it dies. Supervisor automatically starts a new worker.
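Our worker is written in PHP, but the loop translates to most languages. Below is a rough Python analogue (not our production code) of the first three steps: `subprocess.Popen` stands in for proc_open, `select.select` for stream_select, and `run_jobs` with all of its parameters is a hypothetical name. It assumes a Unix-like system, takes a fixed list of commands instead of polling Gearman, kills only the direct child rather than the whole process tree, and leaves out the worker lifetime limit.

```python
import os
import select
import subprocess
import sys
import time


def run_jobs(commands, max_concurrent=40, stale_after=30.0, poll_time=0.05):
    """Run commands concurrently, collecting stdout without blocking on any one job."""
    pending = list(enumerate(commands))
    running = {}   # pipe fd -> [job_id, process, chunks, last_read_time]
    results = {}   # job_id -> collected bytes, or None if killed as stale

    while pending or running:
        # 1. Start new work while there is capacity.
        while pending and len(running) < max_concurrent:
            job_id, cmd = pending.pop(0)
            proc = subprocess.Popen(cmd, stdout=subprocess.PIPE)
            fd = proc.stdout.fileno()
            os.set_blocking(fd, False)
            running[fd] = [job_id, proc, [], time.monotonic()]

        # 2. Poll running work with a short timeout and record any new data.
        readable, _, _ = select.select(list(running), [], [], poll_time)
        for fd in readable:
            job_id, proc, chunks, _ = running[fd]
            data = os.read(fd, 65536)
            if data:
                chunks.append(data)
                running[fd][3] = time.monotonic()
            else:
                # End of file: the job is done, so close the pipe and reap it.
                proc.stdout.close()
                proc.wait()
                results[job_id] = b"".join(chunks)
                del running[fd]

        # 3. Kill jobs that have produced no output for too long.
        now = time.monotonic()
        for fd in list(running):
            job_id, proc, _, last_read = running[fd]
            if now - last_read > stale_after:
                proc.kill()  # simplification: direct child only, not the tree
                proc.stdout.close()
                proc.wait()
                results[job_id] = None
                del running[fd]

    return results
```

For example, `run_jobs([[sys.executable, "-c", "print('hi')"]])` returns a dictionary mapping job 0 to the bytes that command printed. The point of the structure is that one process can babysit many slow shell commands, instead of one mostly idle process per job.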

Site Changes

Our original site wasn’t actually hugely problematic. We had used a large framework, which often left me feeling frustrated. I’ve lost days fighting with it, on everything from better managing 404s to handling other authentication methods. It was the former that led to me rage-quitting the framework and installing Bullet. That said, we’re not afraid to pull in a library to solve specific problems; we’ve been happily using Zend_Mail for a long time.

Solution: We’ve re-written our site to use the Bullet framework. Apart from appreciating micro frameworks in general, they’re particularly apt for delivering APIs. We’ve had great success writing tests under Bullet, and being able to navigate code in minutes rather than hours is an everlasting bonus. This leaves the code base heavily weighted to business logic, rather than a monolithic framework.

Yesterday either the city or Toronto Hydro went through and replaced the streetlights in my neighbourhood. This seemed good, keep the lights working. Then night fell, and while walking to bed I wondered why there was a car with xenon headlights on my front lawn pointing its headlights at my bathroom. That was the only logical reason I could think of for my bathroom to be so bright. It wasn't a car. It was the new streetlights. My entire street now looks like a sports field.

Here is Palmerston, a nice street with pretty street lights (much prettier than ours):

Here is Markham, with the sports field effect:

I took those two pictures on the same camera, with the same settings. I exported them both using the same white balance value. Full resolution options are posted at smugmug. I have emailed Toronto 311, who has forwarded me on to Toronto Hydro. In the meantime I'm going to need upgraded blinds, and to start wearing my sunglasses at night.

We recently expanded the number of disks for the raid on the main server handling Where’s it Up requests. Rebuilding that array took roughly 28 hours, followed by background indexing which took another 16 hours.

During the rebuild, the raid controller was doing its best to monopolize all the I/O operations. This left the various systems hosted on that server in a very constrained I/O state: iowait crested over 50% for many of them, while load breached 260 on a four-core VM. Fun times.

To help reduce the strain we shut down all un-needed virtual machines, and demoted the local Mongo instance to secondary. Our goal here was to reduce the write load on the constrained machine. This broke the experience for our users on wheresitup.com.

We’ve got the PHP driver configured with a read preference of MongoClient::RP_NEAREST. This normally isn’t a problem: we’re okay with some slightly stale results, since they’ll be updated in a moment. Problems can occur if the nearest member of the replica set doesn’t have a record at all when the user asks. This doesn’t occur during normal operations as there’s a delay between the user making the request, and being redirected to the results page that would require them.

Last night, with the local Mongo instance so backed up with I/O operations, it was taking seconds, not milliseconds, for the record to show up.

We shut that member of the replica set off completely, and everything was awesome. Well, apart from the 260 load.

Back in the fall of 2010 I was kicked out of my apartment for a few hours by my fantastic cleaning lady, and I wandered the streets of Montréal on Cloud 9. Not because of the cleaning mind you, but because I’d kissed Allison for the first time the night before, while watching a movie on my couch (Zombieland). Great night. My wanderings took me to the flagship Hudson’s Bay store in Montréal, and inevitably to the electronics department. I decided to check out the 3D televisions.

The first problem I ran into with the 3D TVs was the glasses, they didn’t work. I tried on the first pair (which had an ugly, heavy, security cable attaching them to a podium), no dice. I tried on the second pair, no luck there either. Looking at the glasses in more detail, I found a power button! Pushed, tried them on, nothing. Repeated the process on the other pair, still nothing. There I was, big electronics department, with a range of 3D TVs in front of me, and the glasses didn’t work.

If I was going to describe the target market for 3D televisions in 2010, I might have included a picture of myself. Male, 30, into technology, owns a variety of different entertainment products and consoles, decent disposable income. As far as I could tell I represented the exact person they were hoping would be the early adopters on these.

While wandering off I finally encountered a sales person, and I mentioned that the glasses for the TVs didn’t work. He told me they were fine, and motioned for me to follow him. It turned out that you had to push and hold the power button down for a few seconds in order to turn them on. As I put them on the sales person walked away, and I got to enjoy a demo video in 3D.

Well, sort of. First the glasses had to sync with the television, then I was all set, great demo video on directly in front of me. Of course, I wasn’t in a room with a single television; there were several along the wall to the right and left of the central television, and since my glasses had sync’d with the one directly in front of me (not the others), the other televisions had essentially been turned into strobe lights. Incessantly blinking at me. When I turned my head towards the blinking lights the glasses re-sync’d with a different television, a disorienting procedure that allowed me to view it properly, but turned the one directly in front of me into a strobe light.

So, after requiring aid to put on a pair of glasses that were practically chained down, I was being forced to view very expensive televisions adjacent to a series of strobing pictures with an absentee salesman.

Despite all of the issues, this was really cool! This was 3D, for my living room! No red-blue glasses either, this was the real thing! Maybe I could get one, then invite Allison over again for a 3D MOVIE! Clearly owning impressive technology was the way to any woman’s heart. While those thoughts were racing through my mind I caught my own reflection in a mirror: the glasses were not pretty. If you picture a pair of 3D glasses in your head right now, you’re probably imagining the modern polarized set you get at the movie theatre. Designed to fit anyone, over prescription glasses, rather ugly so people don’t steal them. Those glasses were years away back in 2010, and these were active shutter glasses. Rather than just two panes of polarized plastic, each lens was a small LCD panel capable of going from transparent to opaque and back many times a second. They looked a little like this, but in black:

As I put the glasses back on the presentation pedestal and rubbed my sore nose I realized: There was absolutely no way I could try to kiss a girl for the first time wearing a pair of those. I left the TVs behind, I think I picked up some apples I could slice and flambé to serve over ice cream instead.

The kissability test: When considering a product, could you imagine kissing someone you care about for the first time while using it?

Stuart McLean is a fantastic story teller. I’ve enjoyed his books immensely, but most of all, I’ve enjoyed listening to him tell me his stories on the radio, either directly through CBC Radio 2, or through the podcast made of the show. This past Christmas, I was gifted tickets to see and hear him live here in Toronto, a wonderful time.

When I listen to him on the radio, as his calming voice meanders through the lives in his stories, I often picture him. In my mind, he’s sitting in a library on an overstuffed leather chair, with a tweed coat laid over the arm, a side table with a lamp and glass of water beside him… perhaps a large breed dog dozing at his feet. As each page comes to an end he leisurely grasps the corner, sliding his fingers under the page, gently turning it, just like an avid reader moving through a treasured novel. This is the man I picture when I hear the measured voice regaling me with tales from the mundane to the fantastic.

This could not be further from the truth.

The man I saw at the Sony Centre for the Performing Arts had nothing in common with the man I pictured but the voice. As his story began, as he lapsed into those measured tones, his feet never stopped moving. He danced around the microphone like a boxer, stepping closer to make a point, jumping to the side in excitement, waving his arms in exclamation, always ready to strike us with another adverb. When he reached the bottom of each page, he’d frantically reach forward and throw it back, as eager as a child on Christmas morning. It’s easy to fall under the spell of a great storyteller, to stop seeing and only listen, but his fantastically animated demeanour shook that away, and spiced the story in ways I couldn’t have imagined over years of radio listening.

Listen to his podcast for a while, then go see him live, he’s an utter delight.

I’ve had a few Valentine’s Days over the years. I’ve spent far too much money, I’ve planned in exacting detail, I’ve left things until the last minute, and I’ve spent a fair few alone. This year, I wanted something special.

Just after Christmas Allison was kind enough to give me a hand-knit sweater she’d been working on for over a year. It’s fantastic. Around the same time she’d commented that she was jealous of my neck warmer. An idea struck! I’d knit her a neck warmer!

Small problem: I’ve never knit a thing in my life.

I’ve never let not knowing how to do something stop me before, and this didn’t seem like the time to start. I headed up to Ewe Knit, where Caroline was able to administer a private lesson. First I had to use a swift to turn my skein of yarn into a ball. They appear to sell yarn in a useless format to necessitate the purchase of swifts, a good gig if you can get it.

Once I had my nice ball of yarn I “casted on”, a process I promptly forgot how to accomplish. The process mostly consisted of looping things around one of my knitting needles the prescribed number of times. That number was 17 according to the pattern. Once I’d cast on, the regular knitting started: each row involved an arcane process where I attached a new loop to the loop on the previous row. The first few rows were quite terrifying, but eventually I slipped into a rhythm, and was quite happy with my progress by the time I’d made it to the picture shown below.

Just a few rows later I made a terrifying discovery: I’d invented a new form of knitting. Rather than knitting a boring rectangle, I was knitting a trapezoid, and there was a hole in it. My 17 stitch pattern was now more like 27. There was nothing for it but to pull it out and basically start over.

Several hours, and many episodes of The Office later, I’d slipped into a great rhythm, and developed a mild compulsion to count after every row to ensure I had 15 stitches. The neck warmer was looking great! Just another season of The Office, and some serious “help” from the cat, and I’d be finishing up.

I headed back to Ewe Knit for instructions on casting off, where I tied off the loops I’d been hooking into with each row. Then I sewed the ends together, and wove in my loose ends. A neck warmer was born!

This felt like a success before I’d even wrapped it. I’d spent a lot of time working on something she’d value, I’d learned more about one of her hobbies, and gained new appreciation for the sweater she’d knit for me. She just needed to unwrap it.

She loved it.

WonderProxy will be announcing availability of a new server in Uganda any day now. We’re very excited. When we first launched WonderProxy the concept of having a server anywhere in Africa seemed far-fetched. Uganda is shaping up to be our fifth.

Our provider asked us to pay them by wire transfer, so I dutifully walked to the bank, stood in line, then paid $40CAD in fees & a horrible exchange rate (to USD) to send them money. Not a great day, so I grabbed a burrito on the way home. A few days later we were informed that some intermediary bank had skimmed $20USD off our wire transfer, so our payment was $20USD short. Swell.

In order to send them $20USD, I’d need to go back to the bank, stand in line, hope I got a teller who knew how to do wire transfers (the first guy didn’t), buy $20USD for the provider, $20USD for the intermediate bank, and pay $40CAD for the privilege. $80 to send them $20. Super.

Luckily XE came in to save the day again. Using their convenient online interface I was able to transfer $40USD for only $63CAD, including my wire fee. I paid a much better exchange rate, lower wire fees, and didn’t have to put pants on. The only downside was a lack of burrito. Bummer.

If you’re dealing with multiple currencies and multiple countries, and these days it’s incredibly likely that you are, I’d highly recommend XE.